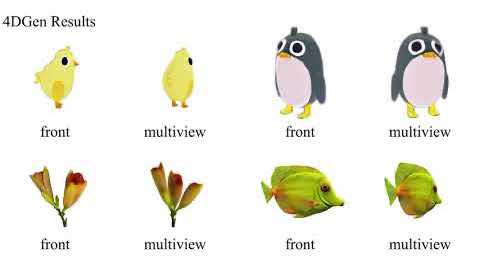

Diffusion4D: Fast Spatial-temporal Consistent 4D Generation via Video Diffusion Models

Hanwen Liang*, Yuyang Yin*, Dejia Xu, and 5 more authors

In Annual Conference on Neural Information Processing Systems, 2024

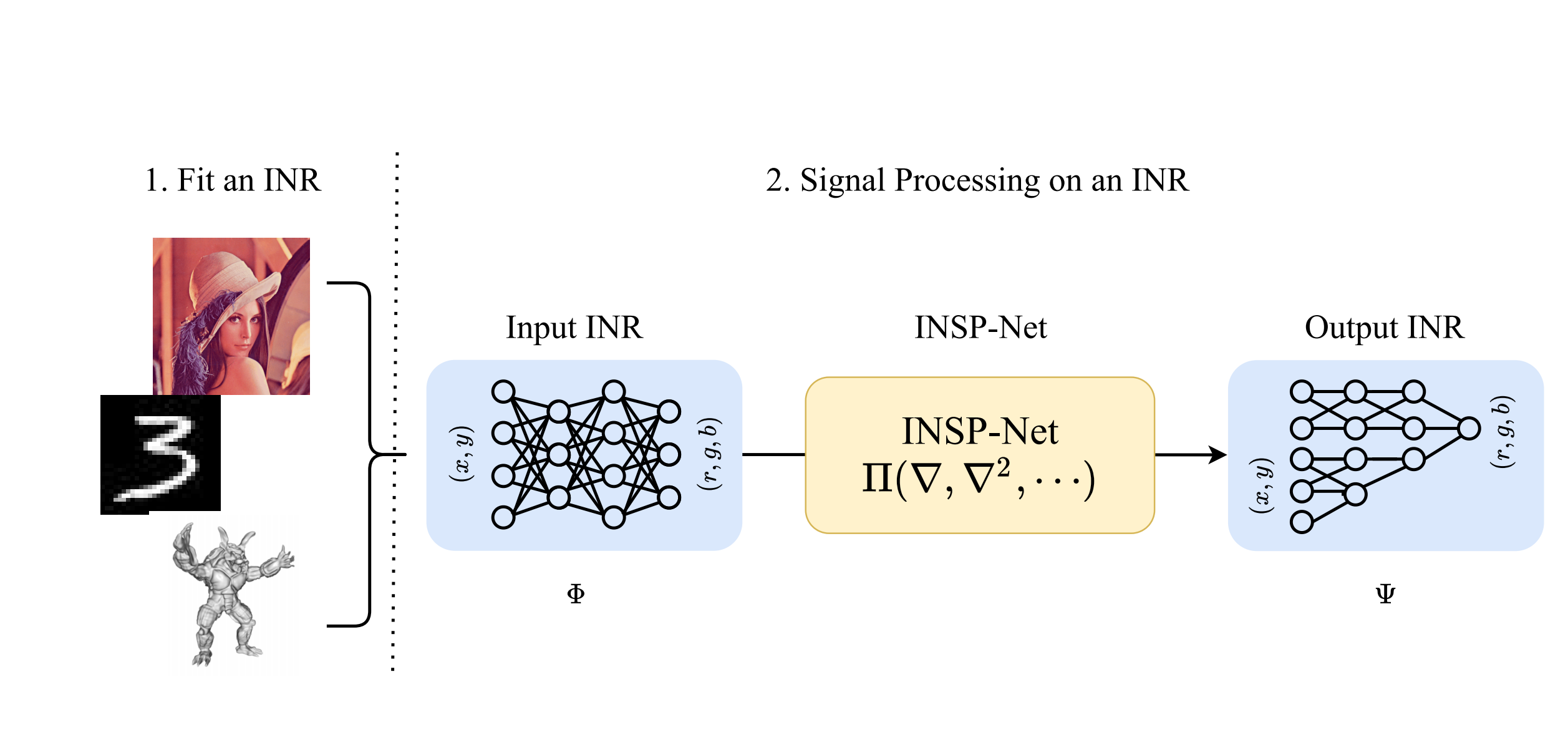

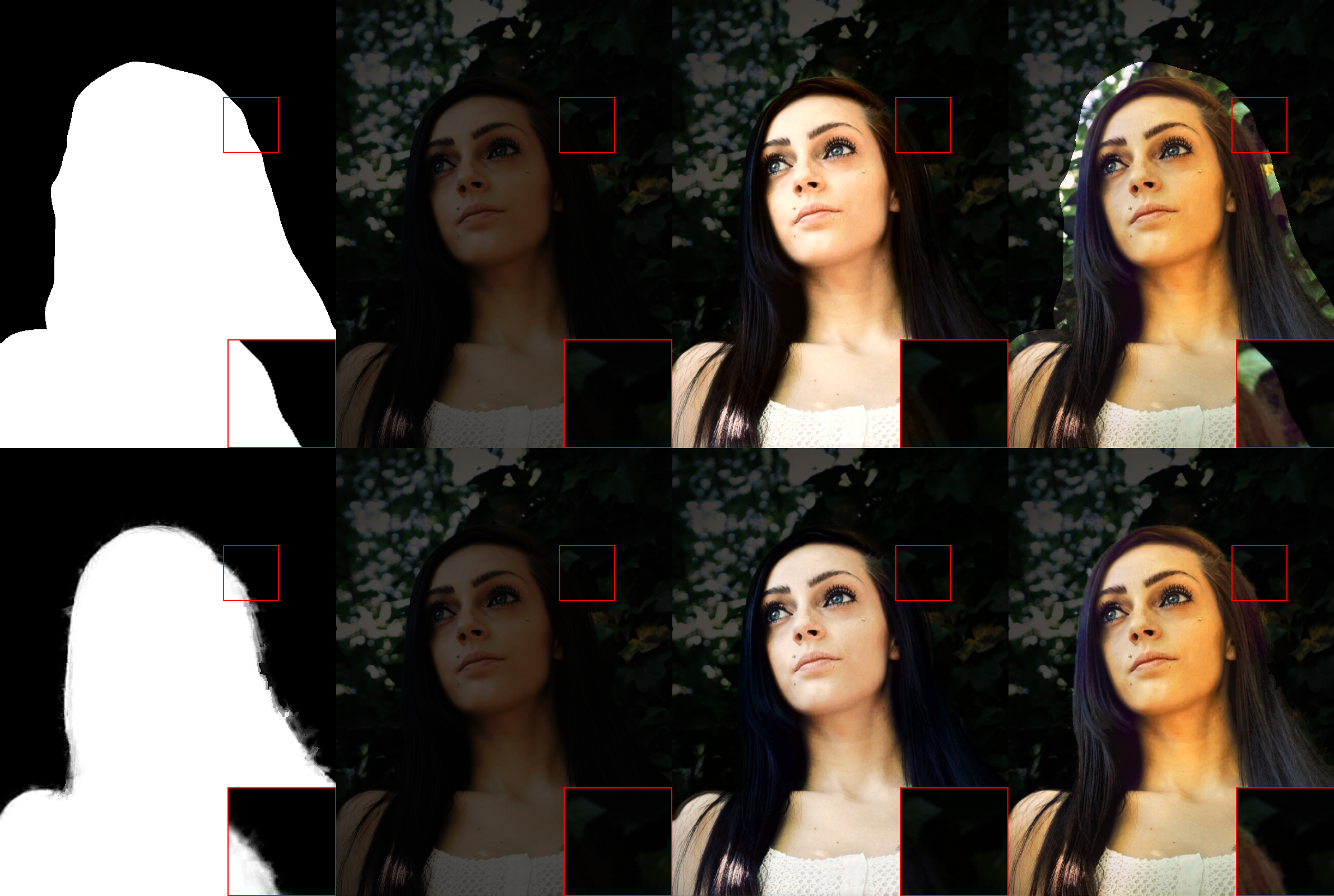

We present a novel 4D content generation framework, Diffusion4D, that, for the first time, adapts video diffusion models for the explicit synthesis of spatially and temporally consistent novel views of 4D assets. The 4D-aware video diffusion model integrates seamlessly with modern off-the-shelf 4D construction pipelines to efficiently create 4D content within a few minutes.